Understanding Kubernetes Architecture, Installation and the world of Orchestration

Kubernetes has become the go-to container orchestration platform for managing and scaling containerized applications. In this article, we will explore the architecture of Kubernetes and various ways to install and set up a Kubernetes cluster. Whether you are a beginner learning Kubernetes or an experienced professional looking for production-level deployment, we will cover all the essential aspects to help you get started.

Introduction to Kubernetes

Kubernetes, often referred to as "K8s," is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. The Cloud Native Computing Foundation (CNCF) now maintains it after Google initially built it. With Kubernetes, you can easily manage complex applications that are composed of multiple containers, running across a cluster of machines.

Kubernetes Architecture

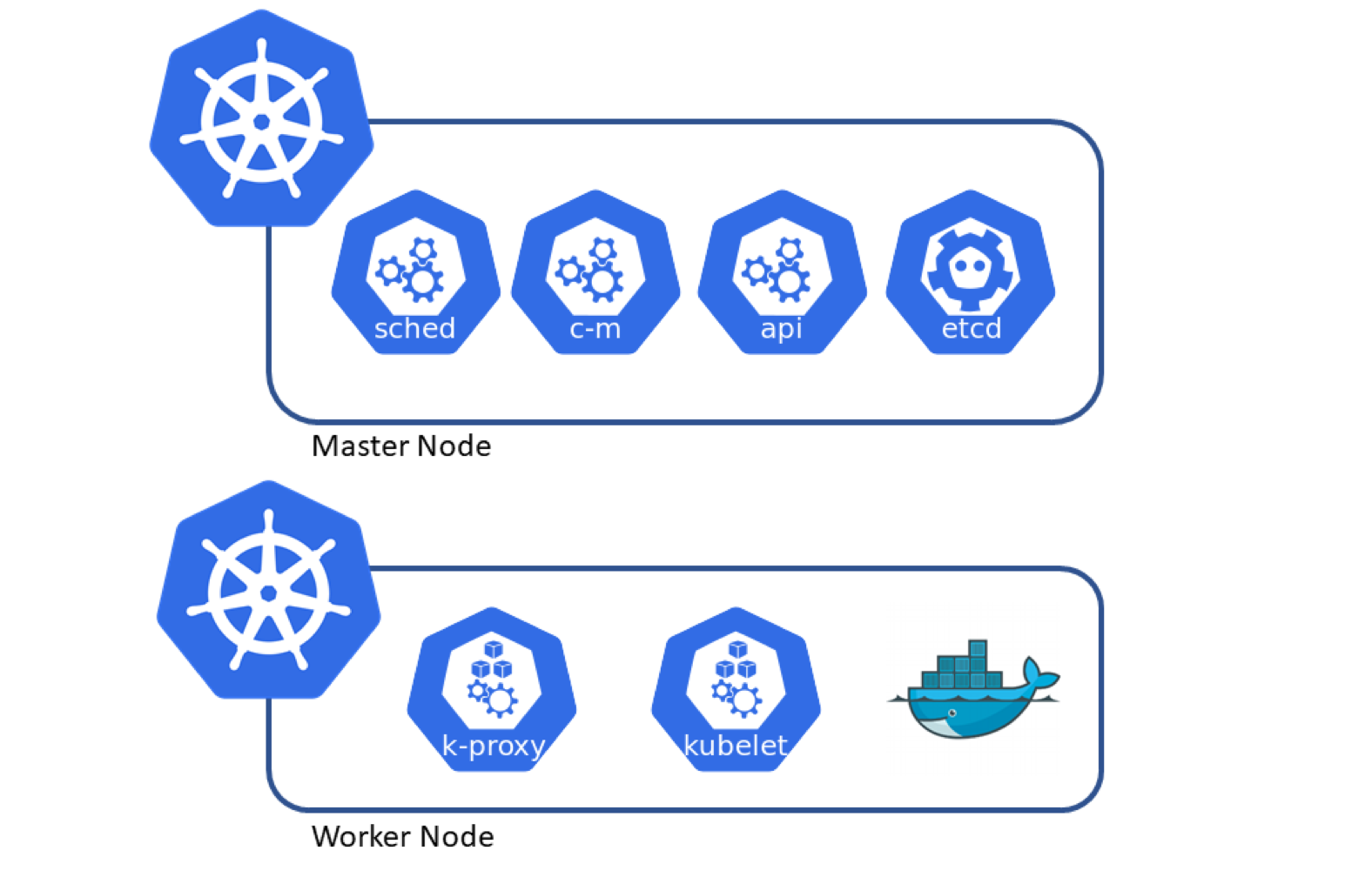

To understand how Kubernetes works, let's take a closer look at its architecture. Kubernetes follows a master-worker architecture, where the master node, also known as the control plane, manages the entire cluster, while the worker nodes run the actual containers.

Control Plane Components

The control plane consists of several components that work together to manage and control the cluster. These components include the following:

kube-apiserver: The kube-apiserver acts as the front-end of the control plane, exposing the Kubernetes API. It handles requests from various sources and ensures the desired state of the cluster is maintained.

kube-controller-manager: The kube-controller-manager runs various controllers that handle different aspects of the cluster's functionality. These controllers include the node controller, which monitors the status of worker nodes, and the replication controller, which manages the desired number of pods.

kube-scheduler: The kube-scheduler is responsible for scheduling pods on worker nodes. It considers factors like resource availability and constraints to determine the optimal placement of pods.

etcd: etcd is a distributed key-value store that stores the cluster's configuration and state. It serves as the cluster's data store and provides consistency and fault tolerance.

Worker Nodes

Worker nodes, also known as worker or worker machines, are responsible for running the actual containers. Each worker node runs the following components:

kubelet: The kubelet is an agent that runs on each worker node and communicates with the control plane. It takes care of starting, stopping, and monitoring containers on the node.

kube-proxy: The kube-proxy is a network proxy that runs on each worker node. It handles network routing and load balancing for services running on the node.

Installation Types

When it comes to installing Kubernetes, you have several options to choose from based on your requirements and expertise. Let's explore the different installation types available.

Local Machine Deployment

If you are learning Kubernetes or want to set up a development environment, deploying Kubernetes on a local machine is a great option. You can download Kubernetes and use various tools supported by the Kubernetes community or the ecosystem to set up a local cluster. These tools provide an easy way to experiment and learn without the need for dedicated infrastructure. There is a fantastic article from RedHat, Start learning Kubernetes from your local machine which details how you can start learning Kubernetes by doing a local machine deployment.

Cloud Deployment

For production-level deployments, deploying Kubernetes on a cloud platform offers scalability, flexibility, and managed services. Leading cloud providers like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure provide managed Kubernetes services that abstract away the underlying infrastructure complexities. These services handle control plane management, allowing you to focus on deploying and managing your applications.

Picture Credits: translucentcomputing.com

Datacenter Deployment

If you prefer to manage your own infrastructure, you can deploy Kubernetes in your own data center. This approach gives you complete control over the cluster and allows for customization based on your specific requirements. You can use tools like kubeadm, the officially supported tool for deploying Kubernetes, to set up and configure your cluster.

Picture Credits: Atlassian

Containerization of Kubernetes Components

Kubernetes components, including the control plane components like kube-apiserver and kube-scheduler, can be deployed as container images within the cluster. It is highly recommended to run these components as container images whenever possible, as it allows Kubernetes to manage and scale them efficiently.

However, there are certain components, like the kubelet, that cannot be containerized. The kubelet runs on each worker node and is responsible for managing containers on that node. It cannot be included in the category of components running as container images.

Managed Kubernetes Services

If managing a Kubernetes cluster yourself seems overwhelming, you can opt for a managed Kubernetes service. These services provide turnkey solutions for running Kubernetes clusters without the need for manual setup and maintenance. Certified platforms offered by cloud providers or other standardized solutions across various cloud and bare metal environments are available. These services handle the underlying infrastructure, including the control plane, leaving you to focus on deploying and managing your applications.

Setting up a Learning Environment

If you are new to Kubernetes and want to learn the basics, it's essential to set up a proper learning environment. The Kubernetes community provides several tools and resources to help you get started.

Tools Supported by the Kubernetes Community

Utilizing the resources provided by the Kubernetes community is advised when learning the technology. With the help of these tools, you can quickly and easily create a Kubernetes cluster on your local workstation for testing and research. Minikube, kind, and k3s are a few well-liked utilities. By making the process of establishing a single-node or multi-node cluster easier, these tools let you investigate the features and ideas of Kubernetes.

Setting up a Production Environment

When it comes to setting up a Kubernetes cluster for production, several considerations come into play. You need to evaluate the aspects of operating a cluster that you want to manage yourself and those you prefer to hand off to a provider.

Using kubeadm

If you decide to manage the Kubernetes cluster yourself, the officially supported tool for deploying Kubernetes is kubeadm. Kubeadm simplifies the process of setting up a production-level cluster by automating several tasks, such as bootstrapping the control plane and joining worker nodes to the cluster. It provides a standardized way to configure and maintain a Kubernetes cluster, ensuring best practices are followed.

Next Steps

Now that you have a basic understanding of Kubernetes architecture and the different ways to install and set up a Kubernetes cluster, you are ready to take the next steps. Here are some things you can do:

Download Kubernetes and explore its features.

Install tools like kubectl, the command-line interface for interacting with Kubernetes clusters.

Choose a container runtime for your cluster, such as Docker or containerd.

Learn about best practices for setting up and managing a Kubernetes cluster.

By diving deeper into Kubernetes, you will unlock its full potential for managing and scaling your containerized applications.

Remember, Kubernetes is a robust platform that empowers developers and operators to efficiently run their applications. With its flexible architecture and various installation options, you can tailor Kubernetes to meet your specific needs, whether you are running a small development cluster or a large-scale production environment.

Happy Kuberneting!