Technology is evolving so rapidly that every cloud provider today offers several services for almost any need. After spending time with AWS and Azure, it is now time to explore GCP (Google Cloud Platform).

Introduction

Cloud Run lets you run stateless containers in a fully managed environment. Because it is built on the open-source Knative project, you can either run your containers fully managed with Cloud Run or in your own Google Kubernetes Engine cluster with Cloud Run for Anthos.

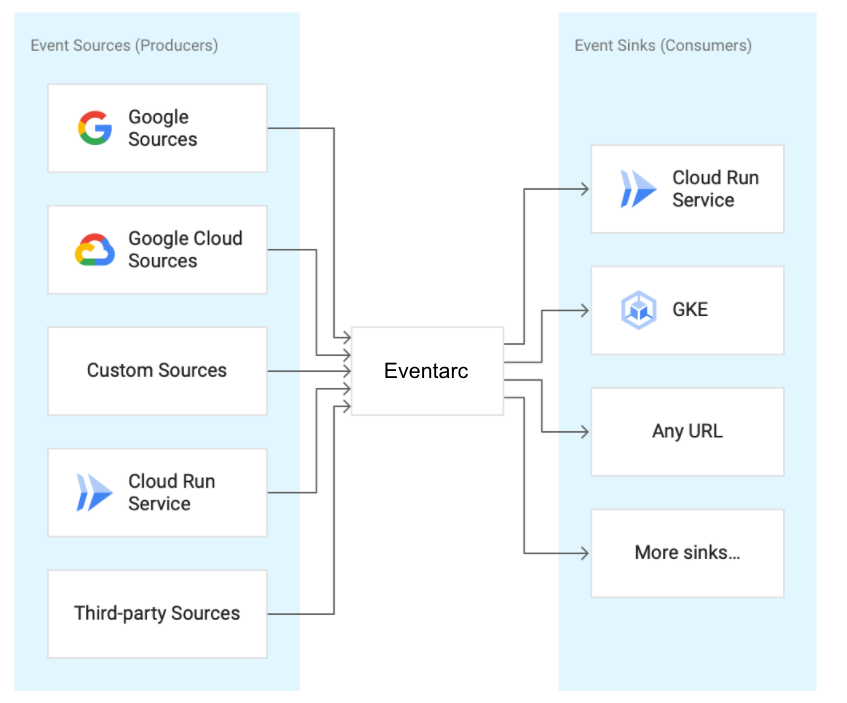

Eventarc makes it simple to connect different services (Cloud Run, Cloud Functions, Workflows) with events from many sources. It lets you build event-driven architectures in which microservices are loosely coupled and distributed. It also handles event ingestion, delivery, security, authorization, and error handling for you, which improves developer agility and application resilience.

You will become familiar with Eventarc in this codelab. More precisely, you will use Eventarc to listen for events from Pub/Sub, Cloud Storage, and Cloud Audit Logs and then send those events to a Cloud Run service.

Eventarc Vision

The goal of Eventarc is to transport events to Google Cloud event destinations from a variety of Google, Google Cloud, and third-party event sources.

Image Source: https://codelabs.developers.google.com/codelabs/cloud-run-events?authuser=1#1

Let me tell you about these blocks:

Google Sources

Event sources that are controlled by Google, including Android Management, Hangouts, Gmail, and more.

Google Cloud Sources

Event sources that Google Cloud controls.

Custom Sources

Sources of events that are produced by end users and are not owned by Google.

3rd Party Sources

Sources of events that are neither created by customers nor controlled by Google. This includes well-known event sources owned and maintained by partners and third-party providers, such as Check Point CloudGuard, Datadog, ForgeRock, Lacework, etc.

To enable cross-service interoperability, events are normalized to the CloudEvents v1.0 standard. Interoperability between services, platforms, and systems is made possible by CloudEvents, an open specification that is vendor-neutral and describes event data in standard formats.
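
To make the CloudEvents format more concrete, here is a minimal sketch that prints a structured-mode CloudEvent as JSON. The four attributes `specversion`, `id`, `source`, and `type` are the ones the CloudEvents v1.0 specification requires. The function name `make_cloudevent`, the `id` and `source` values, and the payload are illustrative assumptions, not output captured from Eventarc.

```shell
# Print a structured-mode CloudEvent (JSON) with the four attributes that
# CloudEvents v1.0 requires: specversion, id, source, type.
# Values are inserted as-is, so they must not contain double quotes.
make_cloudevent() {
  event_type=$1
  event_source=$2
  event_id=$3
  printf '{\n'
  printf '  "specversion": "1.0",\n'
  printf '  "id": "%s",\n' "$event_id"
  printf '  "source": "%s",\n' "$event_source"
  printf '  "type": "%s",\n' "$event_type"
  printf '  "datacontenttype": "application/json",\n'
  printf '  "data": {"note": "placeholder payload"}\n'
  printf '}\n'
}

# Placeholder project, topic, and id, for illustration only.
make_cloudevent \
  "google.cloud.pubsub.topic.v1.messagePublished" \
  "//pubsub.googleapis.com/projects/my-project/topics/my-topic" \
  "1234567890"
```

A receiver that understands CloudEvents can rely on these attributes being present regardless of which source produced the event.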

Setup and requirements

I am doing all of this on my own, so I started by creating an account and a new project.

Creating a new Google Cloud account

Head to the Google Cloud console: https://console.cloud.google.com/

The next step is to create a new project. Click "My first Project", located right next to the Google Cloud logo in the top-left corner, provide a project name, and click Create.

Once the project is created, click Select Project from the options shown.

Start Cloud Shell

Open Cloud Shell using the option in the console shown below:

You should be able to see the screen below with the terminal open

When the shell is connected, you will see something like this:

We need to connect to the project first so that we can start working on the project infrastructure. So execute the commands below:

PROJECT_ID=your-project-id

gcloud config set project $PROJECT_ID
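
Every later command in this walkthrough depends on shell variables such as PROJECT_ID, and those are lost if the shell session is restarted. As a small safeguard, the sketch below checks that the named variables are set before you continue. The helper name `require_vars` is my own invention and is not part of gcloud.

```shell
# Report any of the named shell variables that are unset or empty.
# Returns 0 when all of them are set, 1 otherwise. POSIX sh compatible.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup: read the value of the variable whose name is in $name.
    eval "value=\${$name-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: check the variable defined so far before continuing.
PROJECT_ID=${PROJECT_ID:-your-project-id}
require_vars PROJECT_ID && echo "PROJECT_ID is set to $PROJECT_ID"
```

Later in the walkthrough the same check can be extended, for example `require_vars PROJECT_ID REGION SERVICE_NAME SERVICE_ACCOUNT`.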

Deploy a Cloud Run service

Since we are looking at a publish/subscribe architecture, there has to be a sender and a receiver. We will set up a Cloud Run service to receive the events. First, let us deploy Cloud Run's hello container as a basic event sink that logs the contents of CloudEvents.

Let's enable the required service for Cloud Run:

gcloud services enable run.googleapis.com

Deploy the Hello container:

REGION=us-central1
SERVICE_NAME=hello

gcloud run deploy $SERVICE_NAME \
  --allow-unauthenticated \
  --image=gcr.io/cloudrun/hello \
  --region=$REGION

Once the deployment is complete, you should see the status like this:

Alright! Time for a quick test of the URL. Open the URL in the browser to check that the deployment works:

Great! It works!

Event Discovery

Before you start creating triggers, you can discover which event sources Eventarc supports, the kinds of events they can produce, and how to configure triggers to consume them. To understand the commands better, visit the official Google documentation, which has up-to-date information on them:

https://cloud.google.com/sdk/gcloud/reference/eventarc
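
As a starting point for discovery, the sketch below wraps two commands from that reference: `gcloud eventarc providers list` and `gcloud eventarc providers describe`. The wrapper name `discover_events` is hypothetical, and the guard simply avoids an error on machines where the gcloud CLI is not installed.

```shell
# List Eventarc event providers in a location, then describe one provider
# to see the event types it can emit.
discover_events() {
  location=$1
  provider=$2
  if ! command -v gcloud >/dev/null 2>&1; then
    echo "gcloud CLI not found; run this in Cloud Shell" >&2
    return 127
  fi
  gcloud eventarc providers list --location="$location"
  gcloud eventarc providers describe "$provider" --location="$location"
}

# Usage (not run automatically):
#   discover_events "$REGION" pubsub.googleapis.com
```

The output of the describe call should include the event type strings that are later passed to `--event-filters`.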

Creating a PubSub Trigger

Pub/Sub is one way of receiving events. Any application can publish messages to Pub/Sub, and Eventarc can deliver these messages to Cloud Run. Let's head to the terminal and create a trigger.

Before creating any triggers, we need to enable the Eventarc API. After that, we create a service account for the trigger and then create the trigger itself. Here are the commands:

#Enable the API services
gcloud services enable eventarc.googleapis.com

#Create and configure service account
SERVICE_ACCOUNT=eventarc-trigger-sa
gcloud iam service-accounts create $SERVICE_ACCOUNT

#Create and configure the trigger
TRIGGER_NAME=trigger-pub-subz
gcloud eventarc triggers create $TRIGGER_NAME \
  --destination-run-service=$SERVICE_NAME \
  --destination-run-region=$REGION \
  --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" \
  --location=$REGION \
  --service-account=$SERVICE_ACCOUNT@$PROJECT_ID.iam.gserviceaccount.com

Let us test this deployment now.

#Test the configuration

TOPIC_ID=$(gcloud eventarc triggers describe $TRIGGER_NAME --location $REGION --format='value(transport.pubsub.topic)')

gcloud pubsub topics publish $TOPIC_ID --message="Hello World"

The Cloud Run service logs the body of the incoming message. You can see it in the Logs section of your Cloud Run service.
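
If the logged body shows the payload as a base64 string rather than plain text, that is expected: Pub/Sub carries the message payload base64-encoded in its `data` field. Here is a minimal sketch for decoding such a value by hand. The function name `decode_pubsub_data` is an assumption for illustration.

```shell
# Decode the base64-encoded "data" field of a Pub/Sub message.
decode_pubsub_data() {
  printf '%s' "$1" | base64 --decode
}

# Round trip with the test message published above.
encoded=$(printf '%s' "Hello World" | base64)
echo "encoded: $encoded"                          # encoded: SGVsbG8gV29ybGQ=
echo "decoded: $(decode_pubsub_data "$encoded")"  # decoded: Hello World
```

Copying the `data` value from a log entry into `decode_pubsub_data` should give back the text that was passed to `--message`.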

Creating with an existing Pub/Sub Topic

When you create a Pub/Sub trigger, Eventarc automatically creates a topic behind the scenes, which serves as the transport topic between your application and the Cloud Run service. This is a quick and simple way to set up a Pub/Sub-backed trigger, but there are situations where you might want to use an existing topic instead. With the --transport-topic gcloud flag, Eventarc lets you specify an existing Pub/Sub topic in the same project.

Creating a topic:

TOPIC_ID=eventarc-topic

gcloud pubsub topics create $TOPIC_ID

Create a trigger:

TRIGGER_NAME=trigger-pubsub-existing

gcloud eventarc triggers create $TRIGGER_NAME \
  --destination-run-service=$SERVICE_NAME \
  --destination-run-region=$REGION \
  --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" \
  --location=$REGION \
  --transport-topic=projects/$PROJECT_ID/topics/$TOPIC_ID \
  --service-account=$SERVICE_ACCOUNT@$PROJECT_ID.iam.gserviceaccount.com

Let us send a test message and see if this really worked.

gcloud pubsub topics publish $TOPIC_ID --message="Hello again"

Great! That worked! The command prints the ID of the message that was published.

Creating a Cloud Storage Trigger

So far the events came from Pub/Sub. Next, let us create a trigger that listens for events from Cloud Storage. We start with a bucket:

BUCKET_NAME=eventarc-gcs-$PROJECT_ID

gsutil mb -l $REGION gs://$BUCKET_NAME

The commands above create the bucket we will receive events from.

We need to grant the eventarc.eventReceiver role to the service account so that it can be used in a Cloud Storage trigger:

gcloud projects add-iam-policy-binding $PROJECT_ID \
  --role roles/eventarc.eventReceiver \
  --member serviceAccount:$SERVICE_ACCOUNT@$PROJECT_ID.iam.gserviceaccount.com

Cloud Storage triggers also require the pubsub.publisher role on the Cloud Storage service account:

SERVICE_ACCOUNT_STORAGE=$(gsutil kms serviceaccount -p $PROJECT_ID)

gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member serviceAccount:$SERVICE_ACCOUNT_STORAGE \
  --role roles/pubsub.publisher

Great! We created a bucket and granted the required roles to the service accounts. Let's create a trigger to route new file creation events from the bucket to the service.

TRIGGER_NAME=trigger-storage

gcloud eventarc triggers create $TRIGGER_NAME \
  --destination-run-service=$SERVICE_NAME \
  --destination-run-region=$REGION \
  --event-filters="type=google.cloud.storage.object.v1.finalized" \
  --event-filters="bucket=$BUCKET_NAME" \
  --location=$REGION \
  --service-account=$SERVICE_ACCOUNT@$PROJECT_ID.iam.gserviceaccount.com

Let us take a look at all the triggers we created:

gcloud eventarc triggers list

That gives us all the details about the triggers and their status.

Let's upload a file to the Cloud Storage bucket:

echo "Hello World" > random.txt

gsutil cp random.txt gs://$BUCKET_NAME/random.txt

To see what happened when we ran this command, we can check the logs of the Cloud Run service in the Cloud Console. We should be able to see that the event was received.
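
The same logs can also be read from the terminal with `gcloud logging read`. The sketch below builds a Cloud Logging filter for one Cloud Run service and reads the ten most recent entries. The helper names `run_log_filter` and `read_run_logs` are hypothetical, and the limit of 10 is an arbitrary choice.

```shell
# Build a Cloud Logging filter that selects entries from one Cloud Run service.
run_log_filter() {
  printf 'resource.type="cloud_run_revision" AND resource.labels.service_name="%s"' "$1"
}

# Read recent log entries, but only where the gcloud CLI is available.
read_run_logs() {
  if ! command -v gcloud >/dev/null 2>&1; then
    echo "gcloud CLI not found; filter would be: $(run_log_filter "$1")" >&2
    return 127
  fi
  gcloud logging read "$(run_log_filter "$1")" --limit=10
}

# Usage (not run automatically):
#   read_run_logs "$SERVICE_NAME"
```

New entries can take a few moments to appear, so an empty result right after the upload does not necessarily mean the event was lost.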

One last part before we move on to the Eventarc GUI: creating and testing a Cloud Audit Logs trigger.

Create a Cloud Audit Logs trigger

Although the Cloud Storage trigger is the better way to listen for Cloud Storage events, in this step you create a Cloud Audit Logs trigger that does the same thing.

You have to enable Cloud Audit Logs before you can get events from a service. From the top-left menu of the Cloud Console, select IAM & Admin, then Audit Logs. In the list of services, look for Google Cloud Storage.

Make sure Admin, Read, and Write are selected on the right-hand side, then click Save:

Once this is set, let us create a trigger to route new file creation events from the bucket to our service:

TRIGGER_NAME=trigger-auditlog-storage

gcloud eventarc triggers create $TRIGGER_NAME \
  --destination-run-service=$SERVICE_NAME \
  --destination-run-region=$REGION \
  --event-filters="type=google.cloud.audit.log.v1.written" \
  --event-filters="serviceName=storage.googleapis.com" \
  --event-filters="methodName=storage.objects.create" \
  --event-filters-path-pattern="resourceName=/projects/_/buckets/$BUCKET_NAME/objects/*" \
  --location=$REGION \
  --service-account=$SERVICE_ACCOUNT@$PROJECT_ID.iam.gserviceaccount.com

Let's quickly check if the triggers have been created:

Let us upload the same file we used earlier to the Cloud Storage Bucket again:

gsutil cp random.txt gs://$BUCKET_NAME/random.txt

Let us go to Cloud Run and check the received event:

Great! We can see that the event was received successfully.

One last topic before we can conclude on this is the GUI...

The GUI

Let's head to the GUI to see everything we have created using the CLI.

That's how we can create a trigger from the GUI...

Learning from this project

Here is what we learned:

Vision of Eventarc

Discovering events in Eventarc

Creating a Cloud Run sink

Creating a trigger for Pub/Sub

Creating a trigger for Cloud Storage

Creating a trigger for Cloud Audit Logs

The Eventarc UI

Last but not least, make sure to shut down the resources we created.
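
As a rough sketch of that cleanup, the function below prints the delete commands for the triggers, the Cloud Run service, the topic, the bucket, and the service account named in this walkthrough. It only prints them, so nothing is deleted until you review the output and run it yourself. The function name `print_cleanup_commands` is hypothetical, and it assumes you kept the resource names used above.

```shell
# Print (do not run) the delete commands for the resources created above.
print_cleanup_commands() {
  project=$1
  region=$2
  for trigger in trigger-pub-subz trigger-pubsub-existing \
                 trigger-storage trigger-auditlog-storage; do
    echo "gcloud eventarc triggers delete $trigger --location=$region --quiet"
  done
  echo "gcloud run services delete hello --region=$region --quiet"
  echo "gcloud pubsub topics delete eventarc-topic --quiet"
  echo "gsutil rm -r gs://eventarc-gcs-$project"
  echo "gcloud iam service-accounts delete eventarc-trigger-sa@$project.iam.gserviceaccount.com --quiet"
}

# Falls back to placeholder values when the variables are not set.
print_cleanup_commands "${PROJECT_ID:-your-project-id}" "${REGION:-us-central1}"
```

Once the printed commands look right, they can be piped to `sh`. The IAM role bindings added earlier are not removed by this sketch; deleting the whole project is the simplest way to remove everything at once.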

Happy learning!